How to Design a Successful SemTech PoC

2019-01-29

0.1 Workshop Overview

- Session 1 - Model Creation

- Session 2 - ETL Process

- Session 3 - Data Integration & Visualization

- Session 4 - Demonstrators

1 Typical Use Case

1.1 Steps in Typical Use Case

What is a typical use case for a PoC?

- Start with a question we want answered

- Have some messy data that partially answers the question

- A piece of the picture is missing, but we can find it in Linked Open Data (LOD)

- Create an abstract model representing the ideal data

- Transform messy sources from tabular to graph form (ETL)

- Merge sources into a single dataset

- Further transformation to match data to our ideal data model

- Use finalized dataset to get answers

2 Our Use Case

2.1 Our Use Case

2.1.1 Nepotism in Hollywood

nepotism /ˈnɛpətɪz(ə)m/

The practice among those with power or influence of favouring relatives or friends, especially by giving them jobs.

Mid 17th century: from French népotisme, from Italian nepotismo, from nipote ‘nephew’ (with reference to privileges bestowed on the ‘nephews’ of popes, who were in many cases their illegitimate sons).

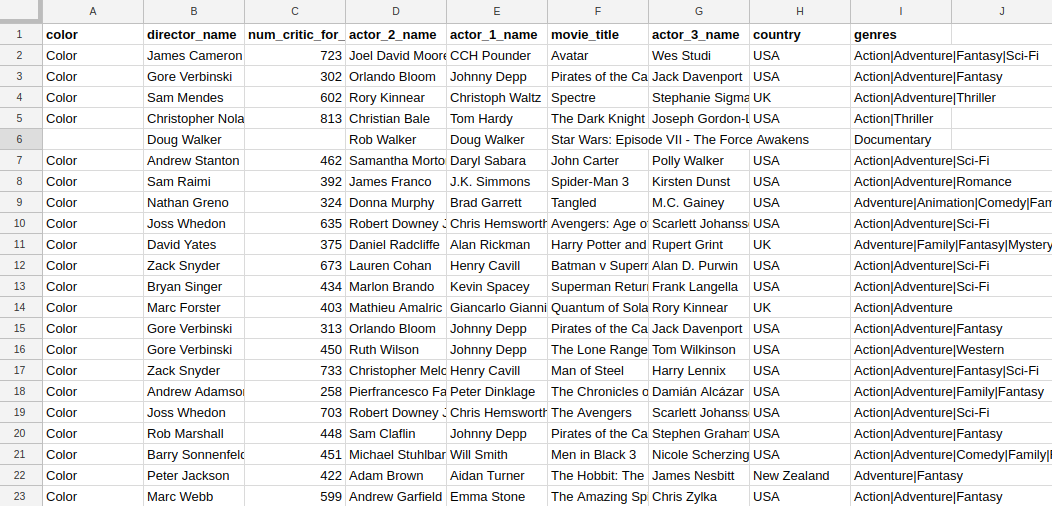

2.2 Simplified IMDB dataset

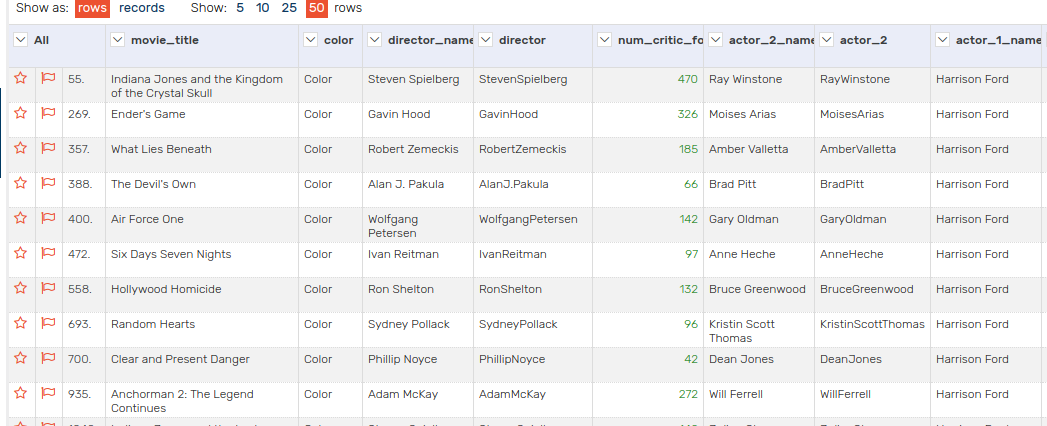

Our dataset is a simplified version of the public IMDB dataset

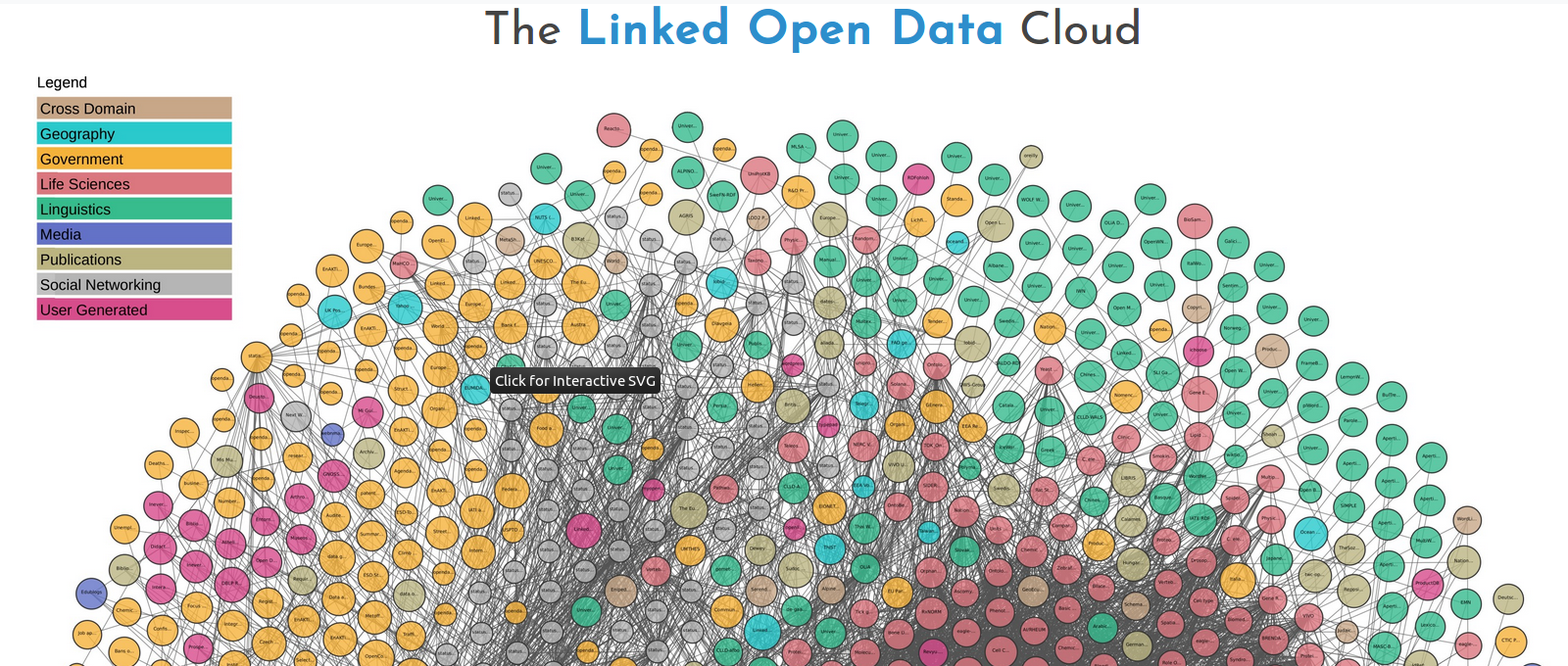

2.3 Sources for Semantic Integration: LOD Cloud

2.4 Sources for Semantic Integration: Datahub

2.5 Sources for Semantic Integration: Google Dataset Search

https://toolbox.google.com/datasetsearch

- Newest development

- Not linked data but can easily be converted

- Very rich

- Growing very quickly

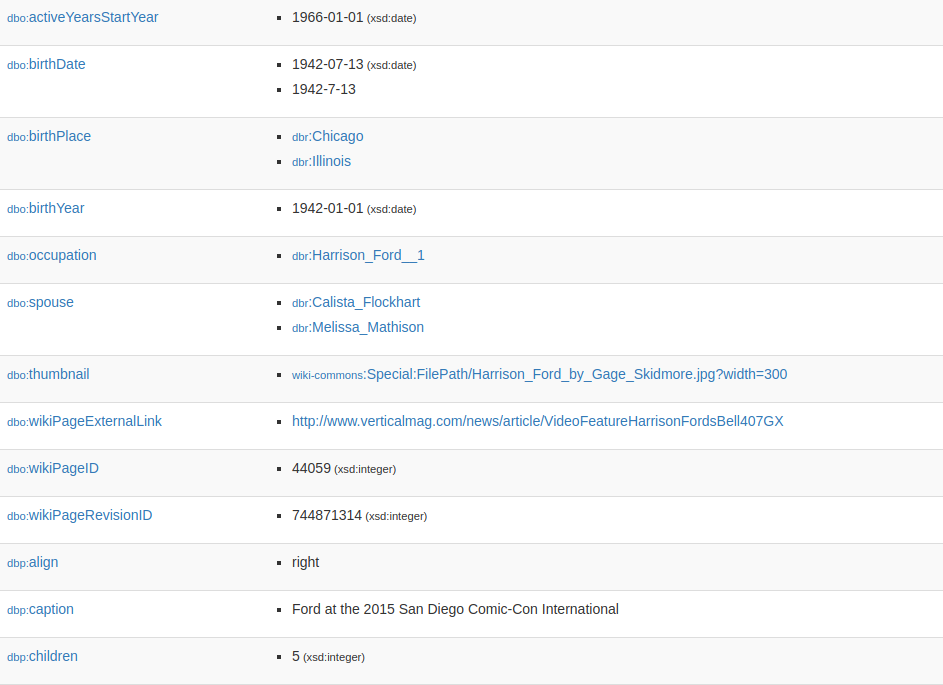

2.6 Sources for Semantic Integration: DBpedia

3 Analysis

3.1 Analyse and document peculiarities of source data

- number of records

- coverage of features (i.e. how many values are missing per feature)

- range of numeric features

- range of date features

- repetitive values in text features
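The checks listed above can be sketched with the Python standard library. This is a minimal illustration, not the workshop's own tooling: the sample table, its column names, and the helper names `profile` and `numeric_range` are all assumptions made for the example.

```python
import csv
import io
from collections import Counter

# Hypothetical sample standing in for one source table; column names are assumptions.
SAMPLE_CSV = """title,year,country,budget
Movie A,1999,USA,1000000
Movie B,2005,United States,
Movie C,,USA,250000
"""

def profile(rows):
    """Return record count, per-column coverage, and the most frequent values."""
    columns = rows[0].keys() if rows else []
    report = {"records": len(rows), "coverage": {}, "top_values": {}}
    for col in columns:
        # Treat empty cells as missing values.
        values = [r[col] for r in rows if r[col] not in ("", None)]
        report["coverage"][col] = len(values) / len(rows)
        report["top_values"][col] = Counter(values).most_common(3)
    return report

def numeric_range(rows, col):
    """Return (min, max) of a numeric column, ignoring empty cells."""
    numbers = [float(r[col]) for r in rows if r[col] not in ("", None)]
    return (min(numbers), max(numbers)) if numbers else None

rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))
report = profile(rows)
year_range = numeric_range(rows, "year")
```

Repeated values in the `top_values` part of the report, such as "USA" next to "United States", are a hint that a column needs normalising before conversion.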

4 Auxiliary Resources

4.1 Select existing auxiliary resources relevant to use case

- FOAF (Friend of a Friend)

- OWL

- Movie Ontology (not needed for this PoC)

- Others to consider

4.2 Movie Ontology

5 Perform semantic conversion (ETL procedure)

5.1 ETL Basics

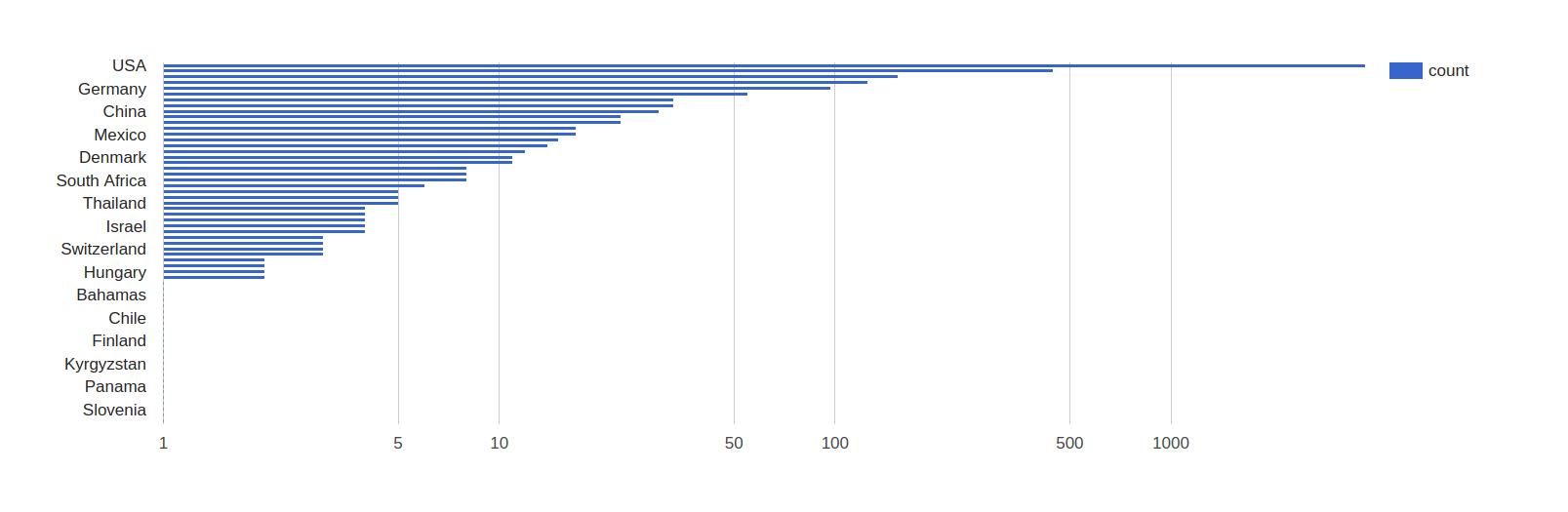

5.2 Normalise values

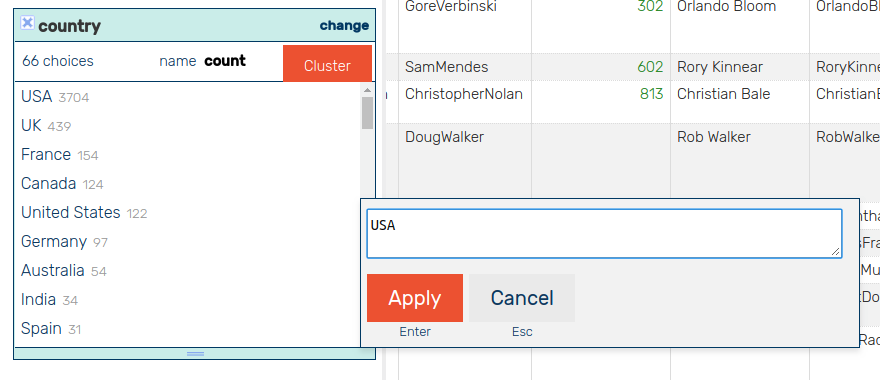

OntoRefine text facets allow quick bulk-editing of values

United States is normalised to USA in 122 cells
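Outside OntoRefine, the same kind of bulk edit could be approximated in plain Python. The sketch below is only an illustration of the idea: the mapping table and the function name `normalise_column` are assumptions, and it does not reproduce how OntoRefine facets work internally.

```python
# Hypothetical mapping of variant spellings to one canonical value.
CANONICAL = {
    "United States": "USA",
    "United States of America": "USA",
    "US": "USA",
}

def normalise_column(rows, column, mapping):
    """Replace variant values in `column` in place; return how many cells changed."""
    changed = 0
    for row in rows:
        value = row[column].strip()
        canonical = mapping.get(value, value)
        if canonical != row[column]:
            row[column] = canonical
            changed += 1
    return changed

rows = [{"country": "United States"}, {"country": "USA"}, {"country": " US "}]
changed = normalise_column(rows, "country", CANONICAL)
```

Returning the number of changed cells mirrors the kind of feedback quoted above ("normalised ... in 122 cells") and makes it easy to spot a mapping that matched nothing.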

5.3 Create new columns

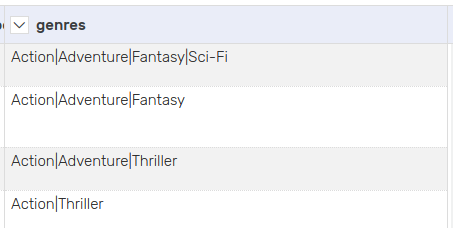

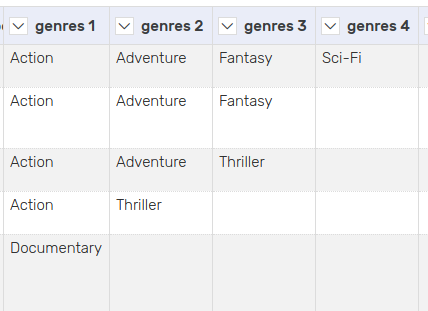

Split columns according to a separator character
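As a rough illustration of the split step, the following sketch divides one multi-valued cell into several new columns. The column names (`genres`, `genre_1`, ...) and the `|` separator are assumptions chosen for the example, not taken from the workshop dataset.

```python
def split_column(rows, column, separator, new_columns):
    """Split `column` on `separator` into `new_columns`, padding missing parts with ''."""
    for row in rows:
        # Limit the number of splits so extra parts stay in the last new column.
        parts = [p.strip() for p in row[column].split(separator, len(new_columns) - 1)]
        parts += [""] * (len(new_columns) - len(parts))
        for name, part in zip(new_columns, parts):
            row[name] = part
    return rows

rows = [{"genres": "Action|Adventure|Sci-Fi"}, {"genres": "Drama"}]
split_column(rows, "genres", "|", ["genre_1", "genre_2", "genre_3"])
```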

5.4 Urlify

Edit the text in the cells

Remove whitespace so that the string can be used in a URL/IRI
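One possible way to urlify a cell value in Python is shown below. It is a sketch under stated assumptions: the base namespace `http://example.org/resource/` is hypothetical, and replacing whitespace with underscores is one common convention rather than the rule used in the workshop.

```python
import re
from urllib.parse import quote

BASE_IRI = "http://example.org/resource/"  # hypothetical namespace

def urlify(text):
    """Trim the text, turn whitespace runs into underscores, percent-encode the rest."""
    collapsed = re.sub(r"\s+", "_", text.strip())
    return quote(collapsed, safe="_-.~")

def to_iri(text):
    """Build an IRI for a cell value under the hypothetical base namespace."""
    return BASE_IRI + urlify(text)

name_iri = to_iri("  Tom  Hanks ")
```

Percent-encoding non-ASCII characters keeps the result usable as a plain URL; if IRIs with Unicode characters are acceptable in the target store, the `quote` call could be relaxed.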

5.5 Reconcile

Use a reconciliation service to match strings to real world objects.

Bulgaria > https://www.wikidata.org/wiki/Q219
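The idea of reconciliation can be illustrated without calling any service. The sketch below uses a tiny local lookup table as a stand-in: only the Bulgaria entry comes from the example above, while the function name `reconcile`, the fuzzy-matching approach, and the cutoff value are assumptions. A real reconciliation service matches against a full knowledge base and usually returns several ranked candidates.

```python
from difflib import get_close_matches

# Local stand-in for a reconciliation service; only this entry comes from the text.
KNOWN_ENTITIES = {"Bulgaria": "https://www.wikidata.org/wiki/Q219"}

def reconcile(label, entities=KNOWN_ENTITIES, cutoff=0.8):
    """Return the identifier of the closest known label, or None if nothing is close enough."""
    matches = get_close_matches(label.strip(), list(entities), n=1, cutoff=cutoff)
    return entities[matches[0]] if matches else None

bulgaria = reconcile(" Bulgaria ")
unknown = reconcile("Atlantis")
```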

5.6 Tabular to Linked Data

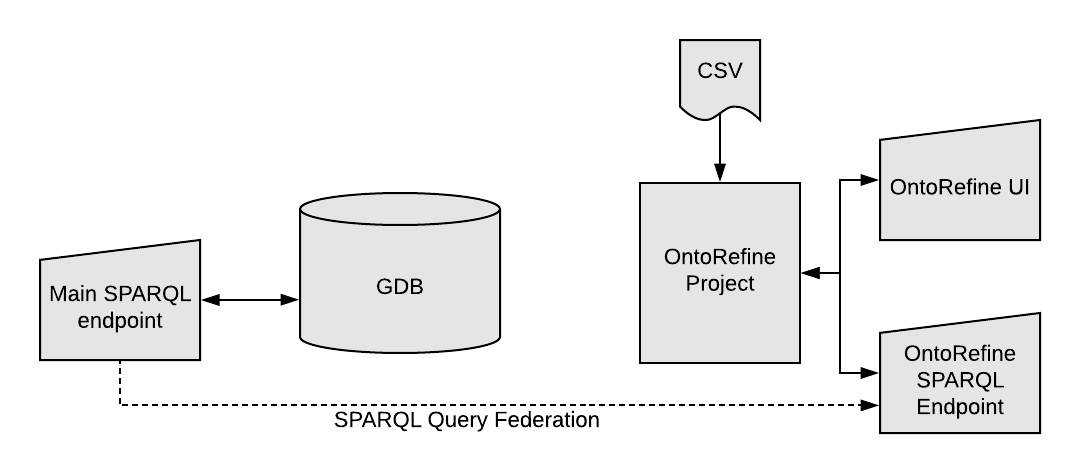

Moving from tabular data to linked data

5.7 Tabular to Linked Data

Here is what our cleaned up table looks like…

5.8 Tabular to Linked Data

… but here it is transformed into RDF.
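To make the transformation concrete, here is a minimal sketch that turns one table row into N-Triples lines. The namespace `http://example.org/`, the column names, and the class name `Movie` are assumptions for illustration; the actual mapping used in the workshop is defined in OntoRefine and is not reproduced here.

```python
BASE = "http://example.org/"  # hypothetical namespace
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"

def literal(value):
    """Format a string as an N-Triples literal, escaping backslashes, quotes and newlines."""
    escaped = value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")
    return f'"{escaped}"'

def row_to_ntriples(row, key_column, class_name):
    """Emit one rdf:type triple plus one triple per non-empty cell of the row."""
    subject = f"<{BASE}resource/{row[key_column]}>"
    lines = [f"{subject} <{RDF_TYPE}> <{BASE}ontology/{class_name}> ."]
    for column, value in row.items():
        if column == key_column or value == "":
            continue  # the key becomes the subject; empty cells produce no triple
        lines.append(f"{subject} <{BASE}ontology/{column}> {literal(value)} .")
    return lines

row = {"id": "movie_1", "title": "Movie A", "year": "1999", "budget": ""}
triples = row_to_ntriples(row, "id", "Movie")
```

Each row becomes a subject, each column a predicate, and each cell an object, which is why missing cells simply disappear instead of being stored as empty values.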

5.9 Tabular to Linked Data

6 Data Integration

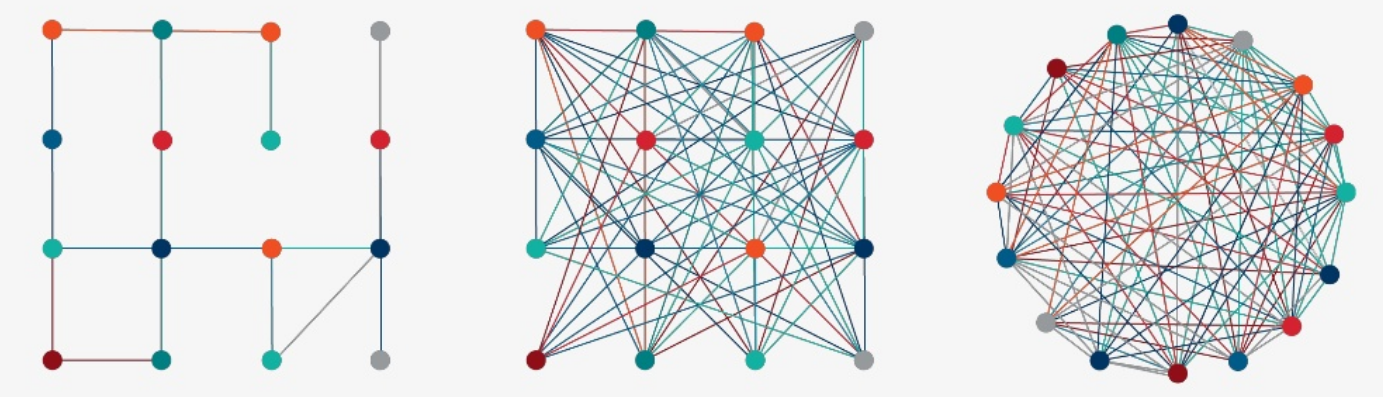

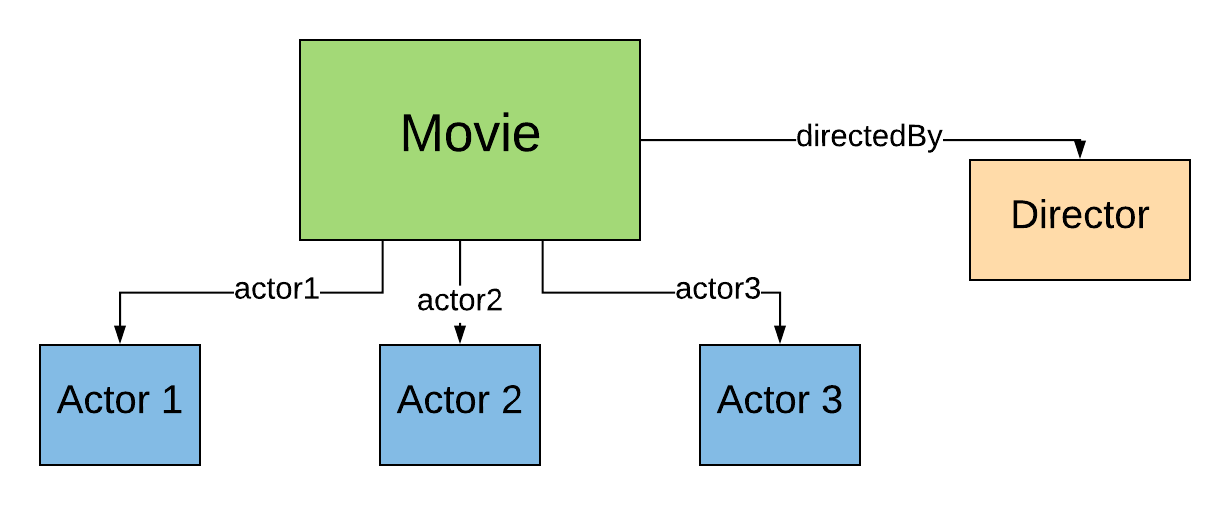

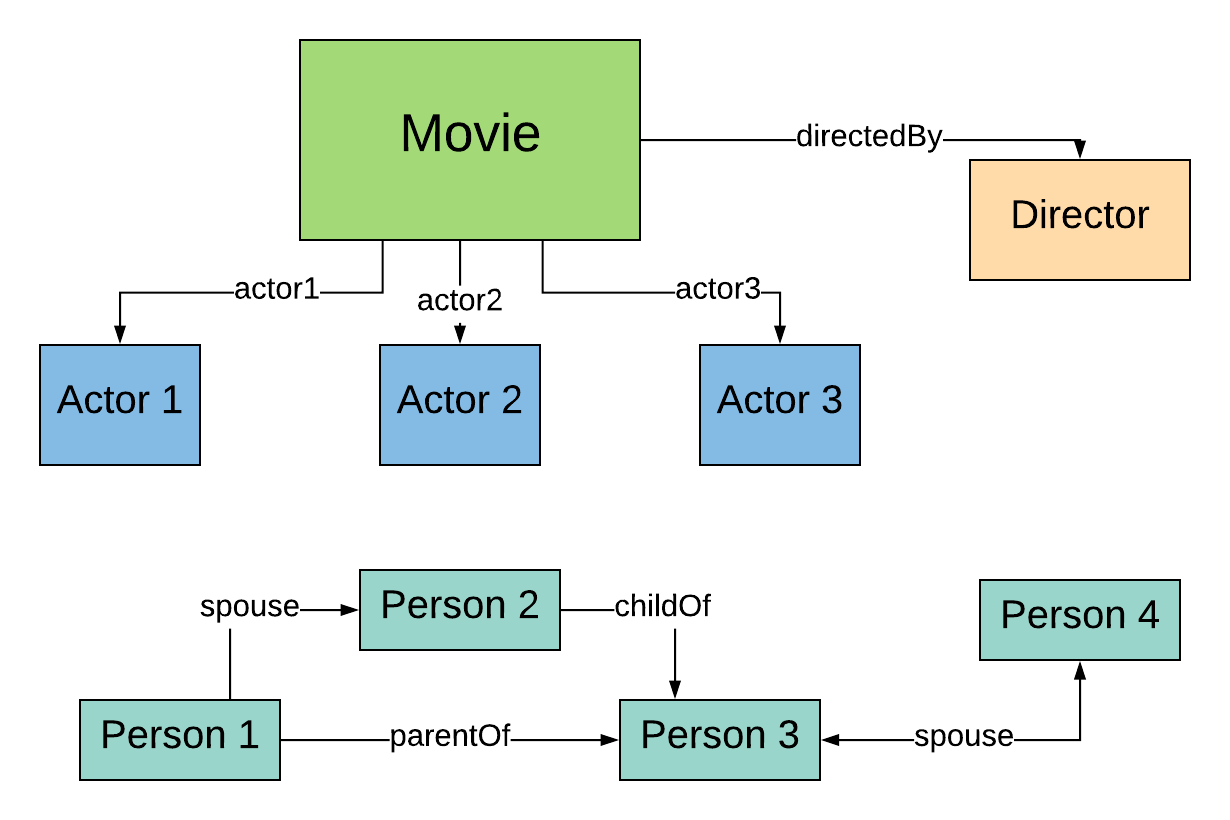

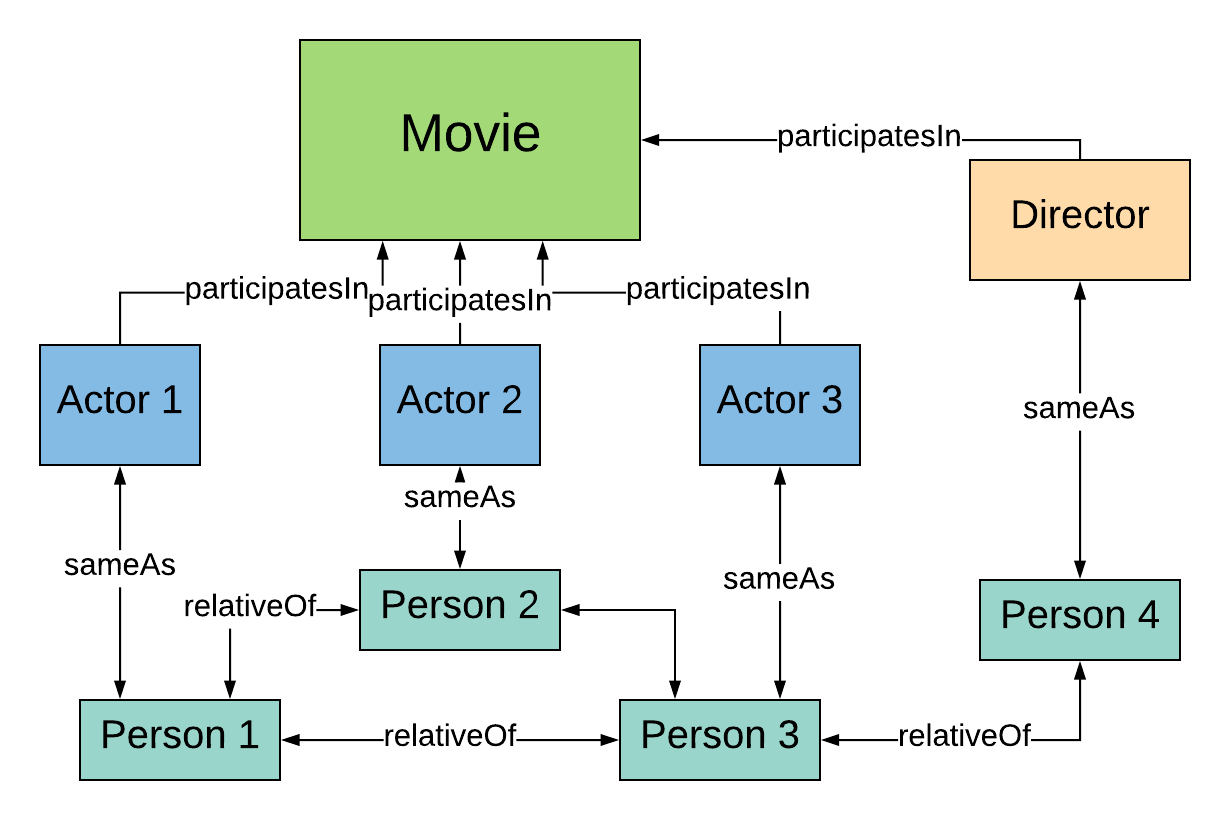

6.1 Initial data model

Output from our ETL procedure

Does this model contain all the data we need?

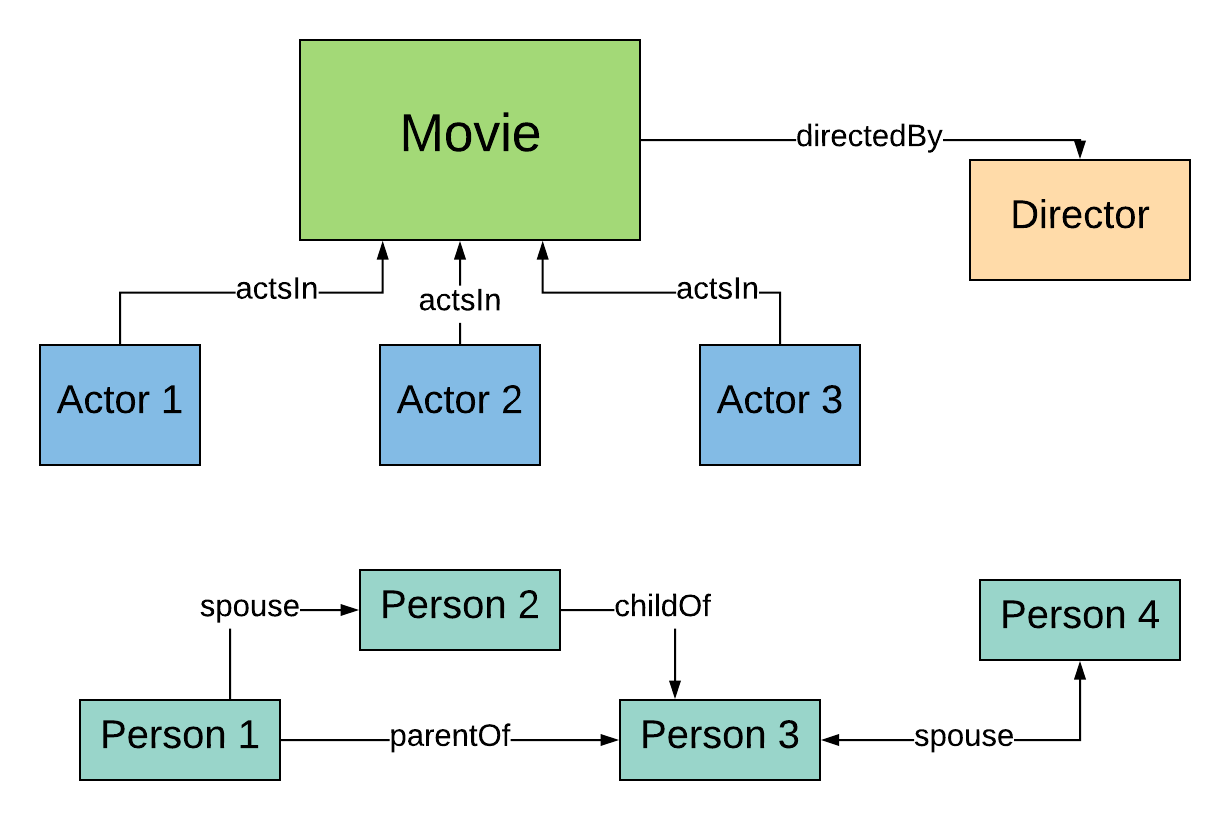

6.2 Expanding the initial model

Incorporating data from an additional data source.

Can we simplify things?

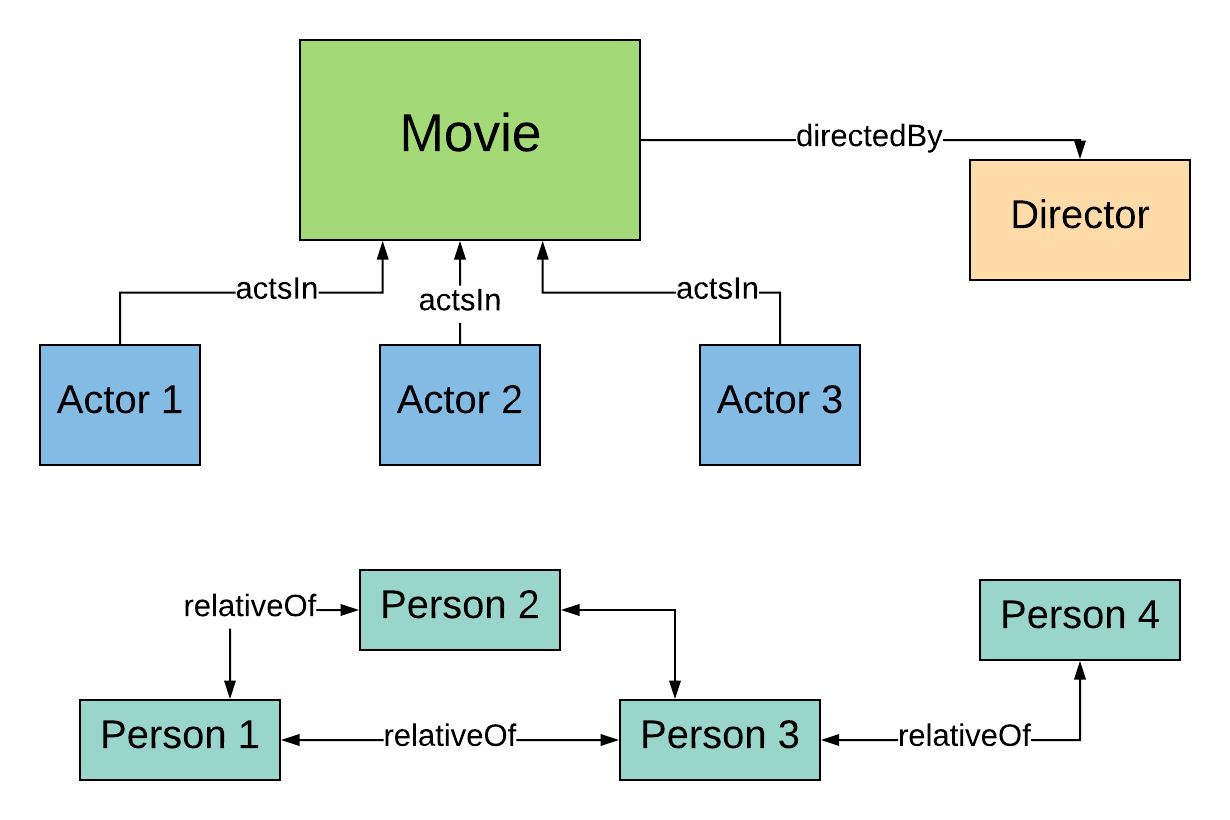

6.3 Creating a new property

A single symmetric relation that is straightforward to use.

Can we simplify things further?

6.4 Creating a second new property

Three relations transformed into a single one.

But we are still working with two disconnected parts.
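The simplification described in the last two slides can be sketched over plain tuples. The predicate names `hasParent`, `hasChild`, `hasSpouse` and the unified `hasRelative` are hypothetical, since the relations in the workshop model are only shown in the diagrams. In a triple store this step would more likely be done with a SPARQL update or by declaring the property an `owl:SymmetricProperty`.

```python
# Hypothetical source predicates; the actual relations appear only in the diagrams.
FAMILY_PREDICATES = {"hasParent", "hasChild", "hasSpouse"}
UNIFIED = "hasRelative"  # hypothetical name for the new property

def unify_relations(triples):
    """Collapse the family predicates into one symmetric relation, stored in both directions."""
    result = set()
    for subject, predicate, obj in triples:
        if predicate in FAMILY_PREDICATES:
            result.add((subject, UNIFIED, obj))
            result.add((obj, UNIFIED, subject))
    return result

triples = [
    ("alice", "hasChild", "bob"),
    ("bob", "hasSpouse", "carol"),
    ("alice", "actedIn", "movie_1"),
]
relatives = unify_relations(triples)
```

With a single symmetric property, a query for "is X related to Y" no longer needs to enumerate every predicate and direction.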

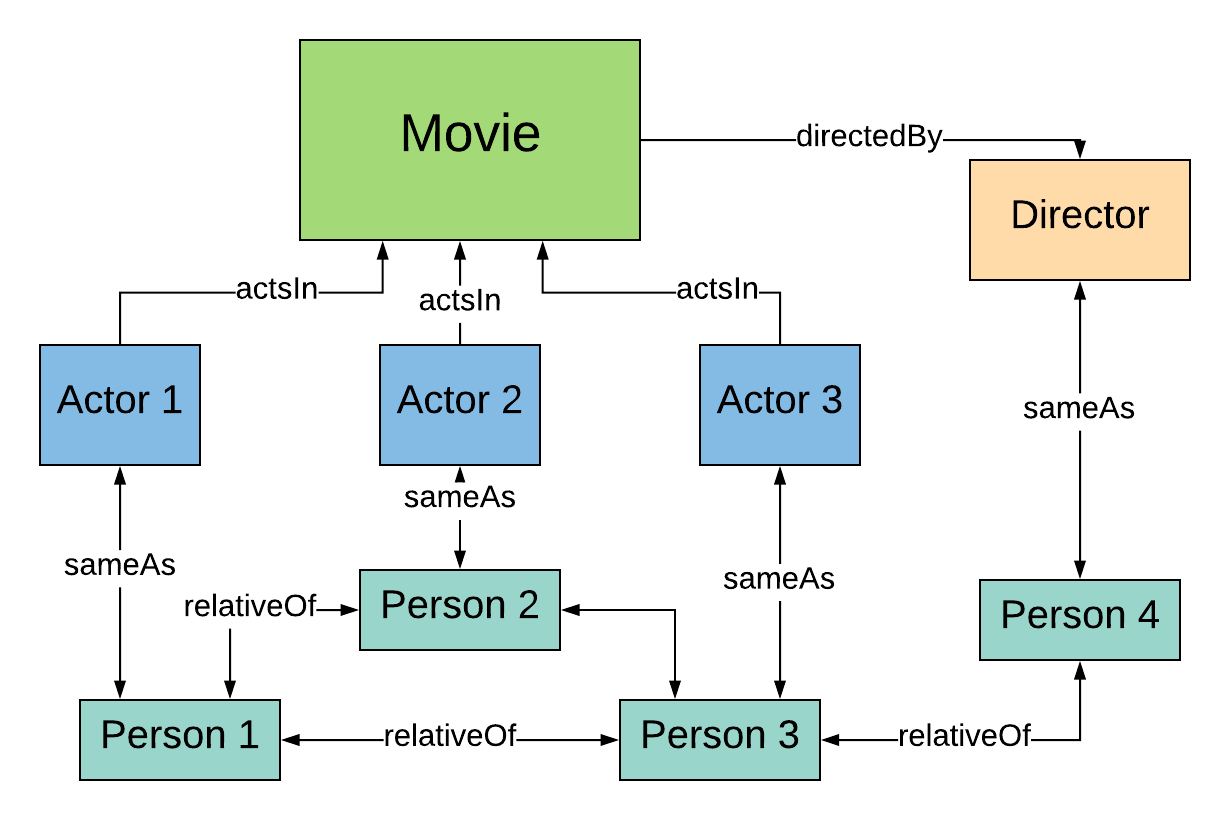

6.5 Connecting the dataset

Now we have everything we need to ask our question.

What if we want to ask a more complex question?

6.6 Changing the model

At later stages we can rework the model, which will then require corresponding changes to the ETL procedure.

7 Ontotext GraphDB Visualization

7.1 Google Charts

Visualize data with Google Charts in GraphDB

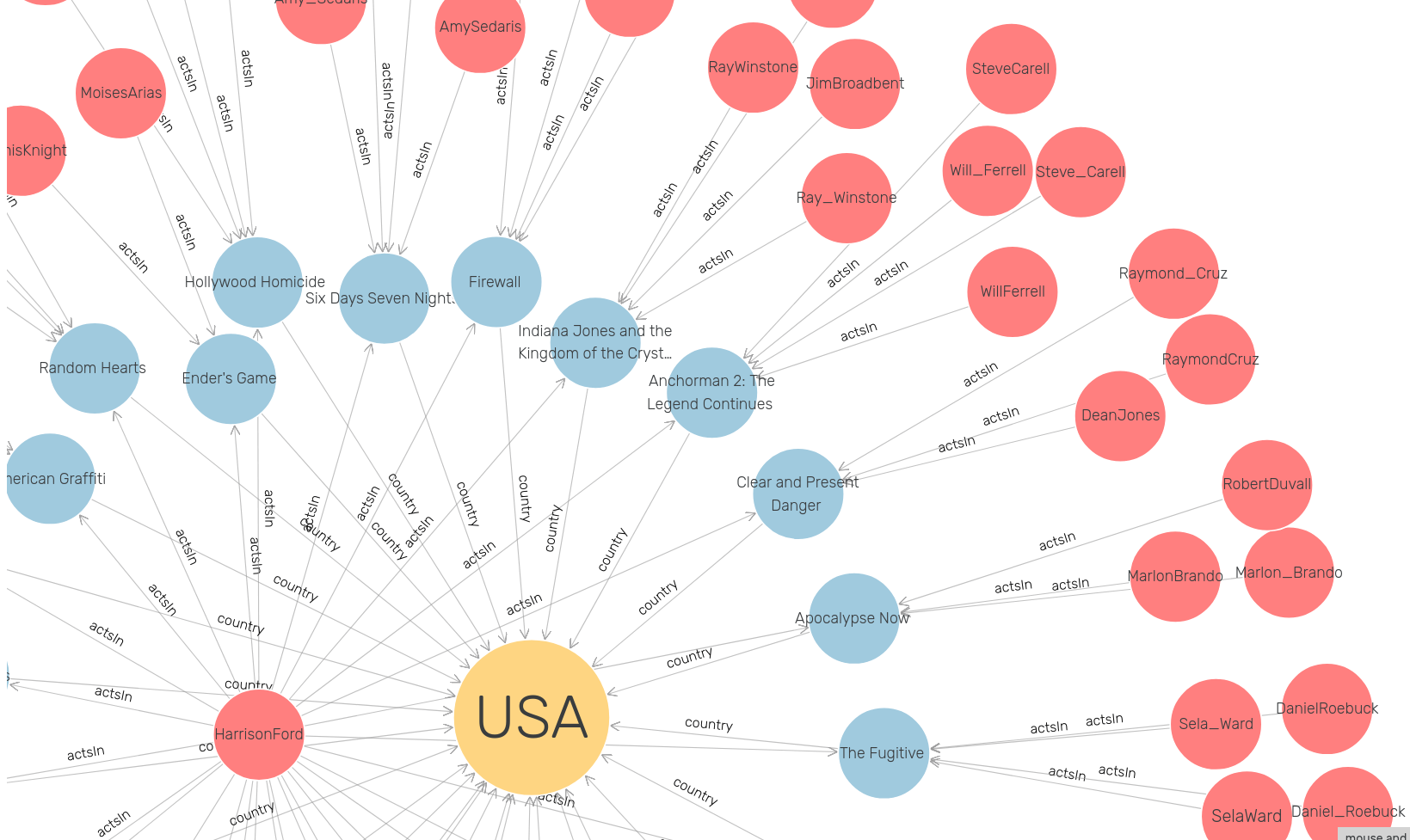

7.2 Visual graph

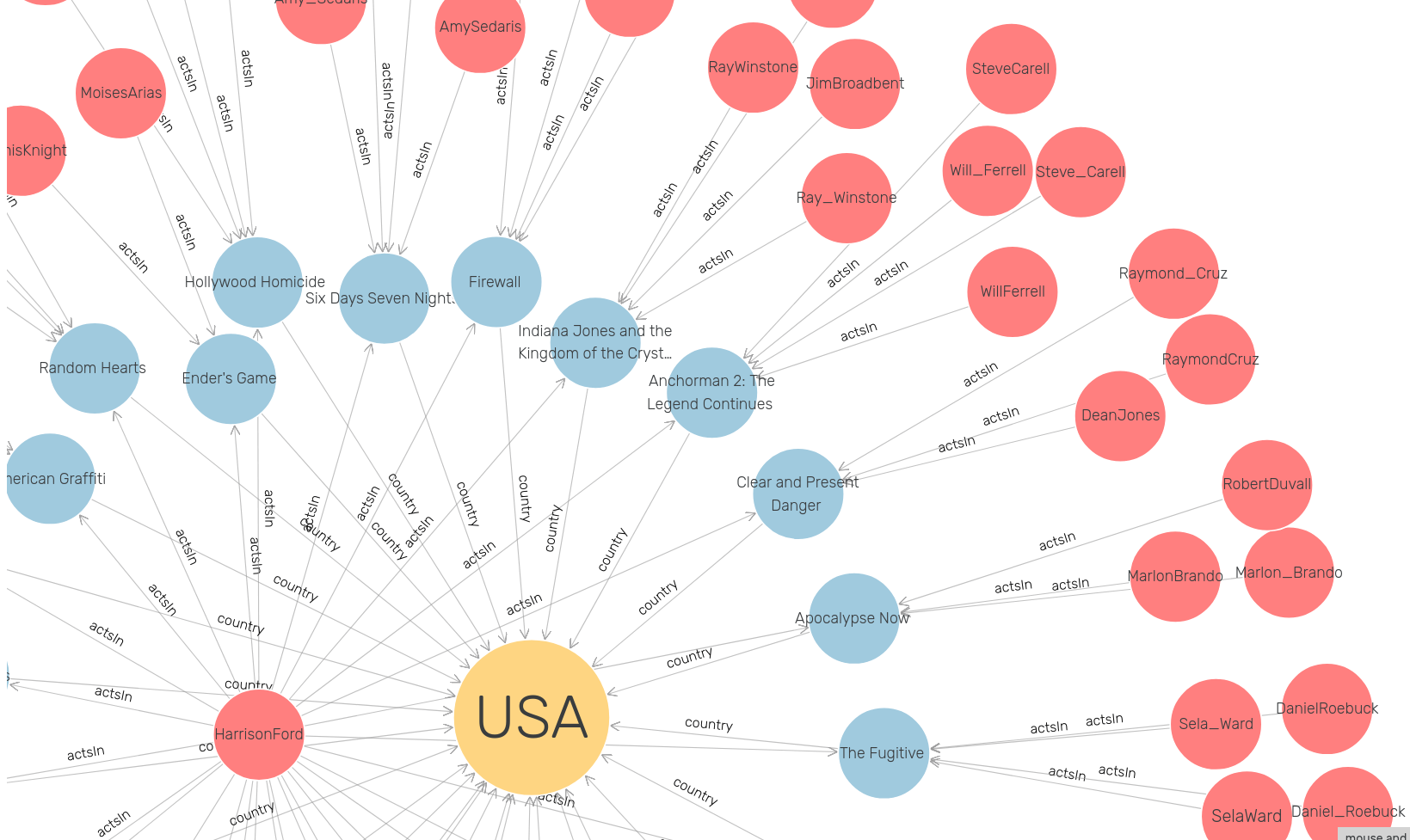

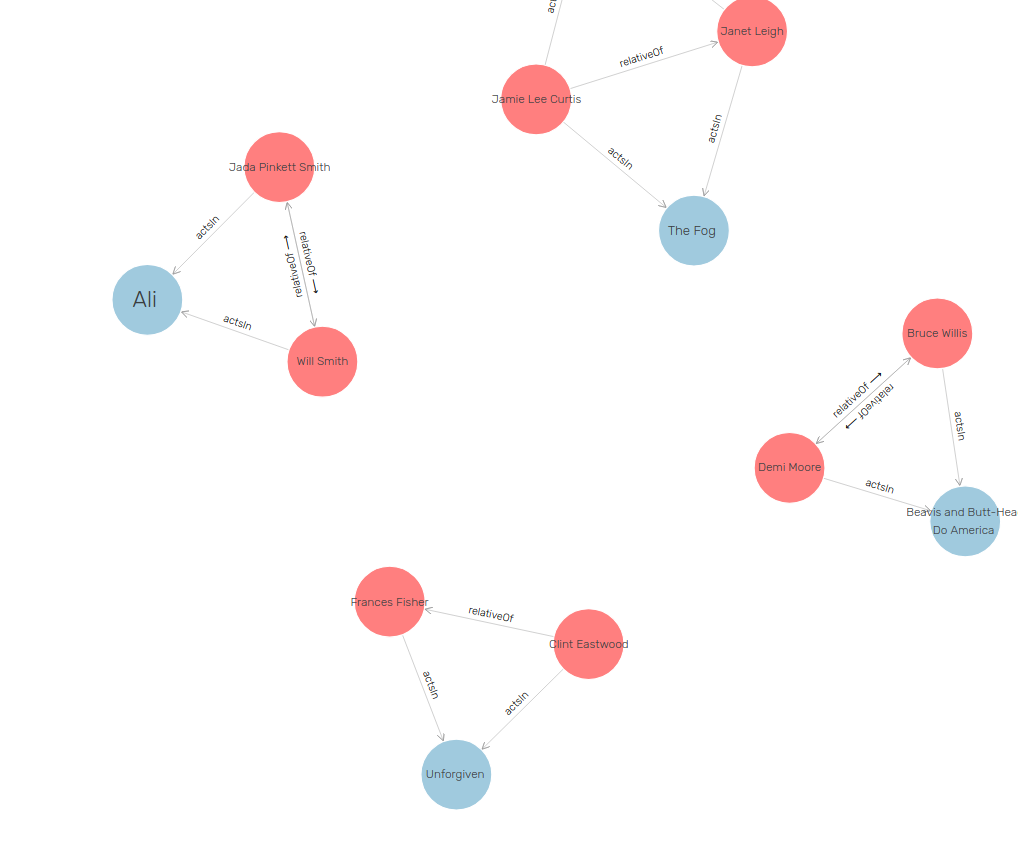

Highly configurable network visualisation using SPARQL

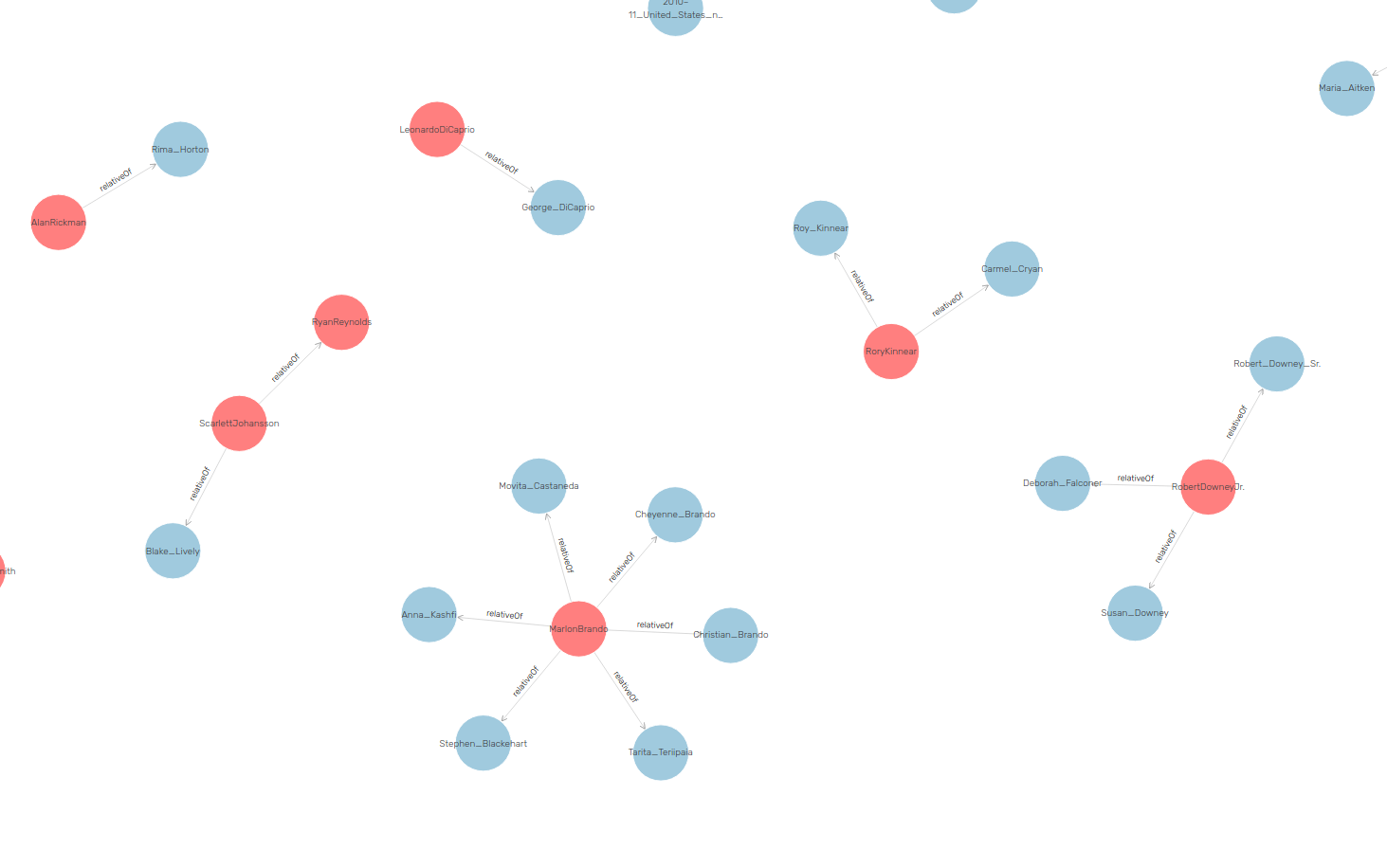

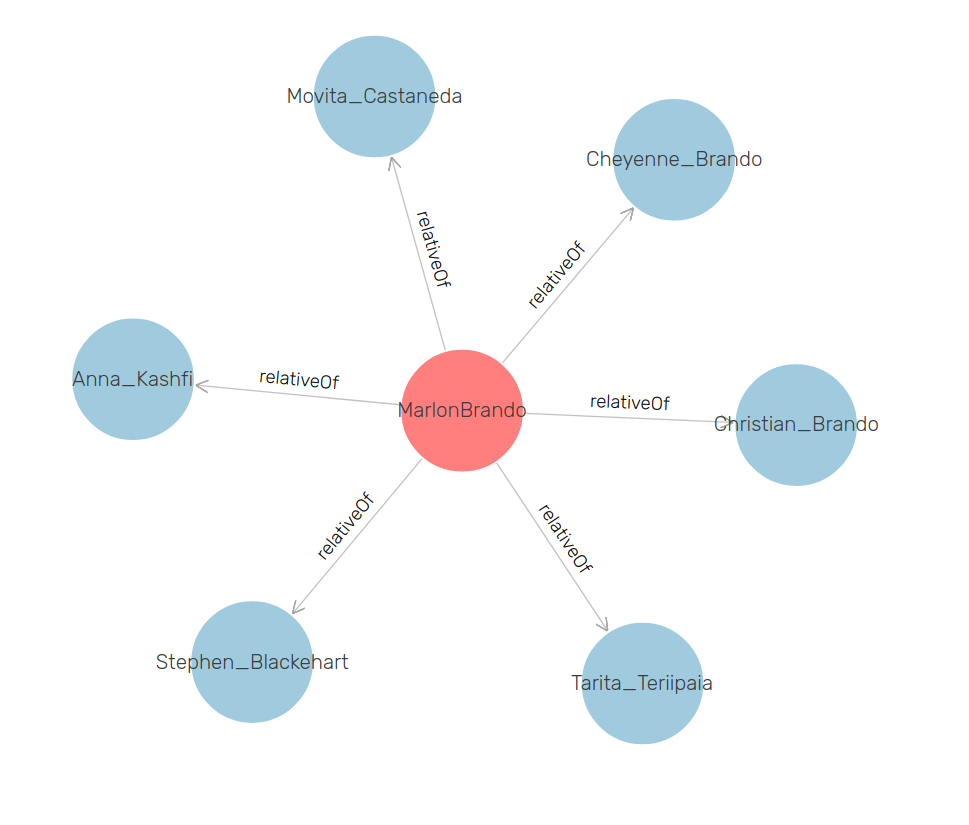

7.3 Visualize Family Relations

7.4 Visualize Family Relations 2

7.5 Is there nepotism in Hollywood?

7.6 Break

Download materials:

http://presentations.ontotext.com/etl-files.zip

Download and install GraphDB Free

7.7 Building a Semantic PoC - Steps

- Start with a question we want answered

- Have some data that partially answers the question

- A piece of the picture is missing, but we can find it in Linked Open Data (LOD)

- Create an abstract model representing the ideal data

- Transform sources from tabular to graph form (ETL)

- Merge sources into a single dataset

- Further transformation to match data to our ideal data model

8 Part II: ETL Process with OntoRefine

8.1 Short Break

Load post-ETL repository:

https://presentations.ontotext.com/movieDB_ETL.trig

Download SPARQL queries for next section:

9 Part III: Transformation and Visualization with GraphDB

10 Part IV: Demonstrators

10.1 FactForge

10.2 Reconciliation

10.3 Questions

@ Semantic PoC Training